If you’re new to ZFS you probably want to start here

Intro

ZFS RAID is not your backup

Besides being a fantastic alternative to hardware RAID, ZFS provides other useful features. However, while features are neat, all that really matters is what problems they solve. RAID protects against data loss when a disk fails, but it does not protect your data from many other catastrophes. It is never safe to treat RAID as your backup solution, even if it is ZFS with scrubbing.

ZFS Snapshots and clones are powerful, it’s awesome

The problems that ZFS snapshots can solve are probably not as mind-blowing today as they were when I first learned about them in 2010, but even today I am still impressed.

| # | Problem | Pro-active steps | Recovery cost | Traditional method |

| 1 | File system corruption | Take a snapshot | Copy uncorrupted data from snapshot or clone | Restore from backup |

| 2 | Human error (deleted or overwritten data) | Take a snapshot | Copy correct version of file from snapshot or clone | Restore from backup |

| 3 | Live backup of running VM | Take a snapshot (You’ll want write-cache off for this) | Make a clone (takes less than 1 minute) and run VM from new clone | Stop VM or use scripts/tools to perform backup |

| 4 | Create test environment | Take a snapshot and make a clone of it | Clone only takes up as much space as is newly written in test. Delete the clone whenever you want. | Full duplication of production system |

There are other applications but the above are the ones I’ve found most useful.

My demo setup

I’m using the same VM I set up for ZFS Basics, which has the following specs:

| Variable | Details |

| OS | Ubuntu 14.04 LTS |

| ZFS | ZoL (http://zfsonlinux.org/) version 0.6.3 for Ubuntu |

| CPU | 4 VM cores from an i7-2600 3.4 GHz |

| MEM | 4 GB of VM RAM from DDR3 host memory |

| NIC | 1 x 1 GbE |

| Disks | One 30 GB OS disk and eight 1 GB disks for ZFS example |

An example of snapshots on a ZFS file system

Working with Snapshots

Overview of snapshots

ZFS snapshots are incredibly easy to work with and understand. ZFS is a copy-on-write (COW) file system, which means that we’re only writing when and where we need to. When you take a ZFS snapshot you are creating a read-only version of the data you “snapshot” that you can always access as it was at that specific moment. Because snapshot data is not rewritten or stored in another place, taking one costs almost no time or space. However, the longer a snapshot lives and the further it drifts out of sync with its parent, the more space it may take up, as it could hold data that no longer exists elsewhere.

Example of working with snapshots

Let’s say I have a dataset called “pool/data”

    root@zfs-demo:/# zpool status
      pool: pool
     state: ONLINE
      scan: none requested
    config:
            NAME        STATE     READ WRITE CKSUM
            pool        ONLINE       0     0     0
              raidz3-0  ONLINE       0     0     0
                01      ONLINE       0     0     0
                02      ONLINE       0     0     0
                03      ONLINE       0     0     0
                04      ONLINE       0     0     0
                05      ONLINE       0     0     0
                06      ONLINE       0     0     0
                07      ONLINE       0     0     0
                08      ONLINE       0     0     0
    errors: No known data errors
    root@zfs-demo:/# zfs list
    NAME        USED  AVAIL  REFER  MOUNTPOINT
    pool        296K  4.81G  67.1K  /pool
    pool/data  64.6K  4.81G  64.6K  /pool/data
    root@zfs-demo:/#

Generate some data we want to “protect”

You can see above that pool/data currently takes up 64k and we have 4.81G left. Now let’s put some data on it that we can protect and ruin.

    root@zfs-demo:/# cd /pool/data
    root@zfs-demo:/pool/data# wget http://winhelp2002.mvps.org/hosts.txt
    --2014-09-14 23:30:48-- http://winhelp2002.mvps.org/hosts.txt
    Resolving winhelp2002.mvps.org (winhelp2002.mvps.org)... 216.155.126.40
    Connecting to winhelp2002.mvps.org (winhelp2002.mvps.org)|216.155.126.40|:80... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 511276 (499K) [text/plain]
    Saving to: ‘hosts.txt’
    100%[==============================================================================================================================================================>] 511,276 959KB/s in 0.5s
    2014-09-14 23:30:48 (959 KB/s) - ‘hosts.txt’ saved [511276/511276]
    root@zfs-demo:/pool/data# ls -al
    total 214
    drwxr-xr-x 2 root root      3 Sep 14 23:30 .
    drwxr-xr-x 3 root root      3 Sep 14 23:22 ..
    -rw-r--r-- 1 root root 511276 Aug 20 14:15 hosts.txt

So we’ve downloaded the most recent MVPS hosts file. Let’s take a peek at the first 50 lines of our new file.

    root@zfs-demo:/pool/data# head hosts.txt -n 50
    # This MVPS HOSTS file is a free download from: #
    # http://winhelp2002.mvps.org/hosts.htm #
    # #
    # Notes: The Operating System does not read the "#" symbol #
    # You can create your own notes, after the # symbol #
    # This *must* be the first line: 127.0.0.1 localhost #
    # #
    #**********************************************************#
    # --------------- Updated: August-20-2014 ---------------- #
    #**********************************************************#
    # #
    # Disclaimer: this file is free to use for personal use #
    # only. Furthermore it is NOT permitted to copy any of the #
    # contents or host on any other site without permission or #
    # meeting the full criteria of the below license terms. #
    # #
    # This work is licensed under the Creative Commons #
    # Attribution-NonCommercial-ShareAlike License. #
    # http://creativecommons.org/licenses/by-nc-sa/4.0/ #
    # #
    # Entries with comments are all searchable via Google. #
    127.0.0.1 localhost
    ::1 localhost #[IPv6]
    # [Start of entries generated by MVPS HOSTS]
    #
    # [Misc A - Z]
    0.0.0.0 fr.a2dfp.net
    0.0.0.0 m.fr.a2dfp.net
    0.0.0.0 mfr.a2dfp.net
    0.0.0.0 ad.a8.net
    0.0.0.0 asy.a8ww.net
    0.0.0.0 static.a-ads.com
    0.0.0.0 abcstats.com
    0.0.0.0 ad4.abradio.cz
    0.0.0.0 a.abv.bg
    0.0.0.0 adserver.abv.bg
    0.0.0.0 adv.abv.bg
    0.0.0.0 bimg.abv.bg
    0.0.0.0 ca.abv.bg
    0.0.0.0 www2.a-counter.kiev.ua
    0.0.0.0 track.acclaimnetwork.com
    0.0.0.0 accuserveadsystem.com
    0.0.0.0 www.accuserveadsystem.com
    0.0.0.0 achmedia.com
    0.0.0.0 csh.actiondesk.com
    0.0.0.0 ads.activepower.net
    0.0.0.0 app.activetrail.com

Taking a snapshot of our important data

Let’s say this is data we want to protect in its current state. We can make this happen by taking a snapshot.

    root@zfs-demo:/pool/data# zfs list
    NAME        USED  AVAIL  REFER  MOUNTPOINT
    pool        503K  4.81G  67.1K  /pool
    pool/data   272K  4.81G   272K  /pool/data
    root@zfs-demo:/pool/data# zfs snapshot pool/data@2014-09-14
    root@zfs-demo:/pool/data# zfs list -t all
    NAME                   USED  AVAIL  REFER  MOUNTPOINT
    pool                   511K  4.81G  67.1K  /pool
    pool/data              272K  4.81G   272K  /pool/data
    pool/data@2014-09-14      0      -   272K  -

As you can see I created a snapshot of pool/data and named it “2014-09-14” so I’d have a reference as to what it was. Our new snapshot takes up 0 space presently because it is identical to the source data pool/data. You may have noticed that my “zfs list” had a “-t all” appended to it; this is because snapshots are not shown by default with zfs list. Now, let’s mess up the “hosts.txt” and see how things change.
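A date-based name like the one used here is easy to generate rather than type. The following is a minimal sketch, assuming a hypothetical helper called `snapshot_today` (it is not part of ZFS); it only wraps the `zfs snapshot` command shown above.

```shell
# snapshot_today is a hypothetical helper, not a ZFS command.
# It snapshots the given dataset and names the snapshot after today's date,
# for example pool/data@2014-09-14.
snapshot_today() {
    dataset=$1
    zfs snapshot "${dataset}@$(date +%Y-%m-%d)"
}

# Usage (requires an existing dataset and root privileges):
#   snapshot_today pool/data
```

Note that a second call on the same day would fail because the snapshot name already exists, so a finer-grained timestamp is needed for anything more frequent than daily.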

Ruin our important data

    root@zfs-demo:/pool/data# cat /var/log/kern.log > ./hosts.txt
    root@zfs-demo:/pool/data# ls -al
    total 214
    drwxr-xr-x 2 root root      3 Sep 14 23:30 .
    drwxr-xr-x 3 root root      3 Sep 14 23:22 ..
    -rw-r--r-- 1 root root 614532 Sep 14 23:40 hosts.txt
    root@zfs-demo:/pool/data# head hosts.txt -n 50
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Initializing cgroup subsys cpuset
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Initializing cgroup subsys cpu
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Initializing cgroup subsys cpuacct
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Linux version 3.13.0-32-generic (buildd@kissel) (gcc version 4.8.2 (Ubuntu 4.8.2-19ubuntu1) ) #57-Ubuntu SMP Tue Jul 15 03:51:08 UTC 2014 (Ubuntu 3.13.0-32.57-generic 3.13.11.4)
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Command line: BOOT_IMAGE=/boot/vmlinuz-3.13.0-32-generic root=UUID=cf059193-4d41-4e02-896a-d9a2ca03d417 ro
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] KERNEL supported cpus:
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Intel GenuineIntel
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] AMD AuthenticAMD
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Centaur CentaurHauls
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] e820: BIOS-provided physical RAM map:
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x0000000000000000-0x000000000009fbff] usable
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x000000000009fc00-0x000000000009ffff] reserved
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x00000000000f0000-0x00000000000fffff] reserved
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x0000000000100000-0x00000000dfffdfff] usable
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x00000000dfffe000-0x00000000dfffffff] reserved
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x00000000feffc000-0x00000000feffffff] reserved
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fffc0000-0x00000000ffffffff] reserved
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BIOS-e820: [mem 0x0000000100000000-0x000000011fffffff] usable
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] NX (Execute Disable) protection: active
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] SMBIOS 2.4 present.
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] DMI: Bochs Bochs, BIOS Bochs 01/01/2011
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Hypervisor detected: KVM
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] e820: update [mem 0x00000000-0x00000fff] usable ==> reserved
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] e820: remove [mem 0x000a0000-0x000fffff] usable
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] No AGP bridge found
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] e820: last_pfn = 0x120000 max_arch_pfn = 0x400000000
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] MTRR default type: write-back
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] MTRR fixed ranges enabled:
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 00000-9FFFF write-back
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] A0000-BFFFF uncachable
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] C0000-FFFFF write-protect
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] MTRR variable ranges enabled:
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 0 base 00E0000000 mask FFE0000000 uncachable
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 1 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 2 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 3 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 4 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 5 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 6 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] 7 disabled
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] PAT not supported by CPU.
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] e820: last_pfn = 0xdfffe max_arch_pfn = 0x400000000
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] found SMP MP-table at [mem 0x000f0b10-0x000f0b1f] mapped at [ffff8800000f0b10]
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Scanning 1 areas for low memory corruption
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] Base memory trampoline at [ffff880000099000] 99000 size 24576
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] init_memory_mapping: [mem 0x00000000-0x000fffff]
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] [mem 0x00000000-0x000fffff] page 4k
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BRK [0x01fdf000, 0x01fdffff] PGTABLE
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BRK [0x01fe0000, 0x01fe0fff] PGTABLE
    Aug 18 21:28:35 zfs-demo kernel: [ 0.000000] BRK [0x01fe1000, 0x01fe1fff] PGTABLE

Oh no, some foolish user or process has written over my hosts.txt with the kernel log. If it wasn’t for my snapshot the data would be lost! What does the snapshot look like now?

    root@zfs-demo:/pool/data# zfs list -t all
    NAME                   USED  AVAIL  REFER  MOUNTPOINT
    pool                   725K  4.81G  67.1K  /pool
    pool/data              472K  4.81G   222K  /pool/data
    pool/data@2014-09-14   250K      -   272K  -

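So now the snapshot takes up 250K, which is the entire MVPS hosts file compressed using ZFS lz4 compression. The overwrite replaced every block of the file, so the snapshot is now the only thing still referencing the old blocks.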

Accessing our pre-catastrophe data

ZFS exposes snapshots in a hidden .zfs directory. Without any special steps we can access a read-only version of our old hosts file as it was before we overwrote it.

    root@zfs-demo:/pool/data# head /pool/data/.zfs/snapshot/2014-09-14/hosts.txt
    # This MVPS HOSTS file is a free download from: #
    # http://winhelp2002.mvps.org/hosts.htm #
    # #
    # Notes: The Operating System does not read the "#" symbol #
    # You can create your own notes, after the # symbol #
    # This *must* be the first line: 127.0.0.1 localhost #
    # #
    #**********************************************************#
    # --------------- Updated: August-20-2014 ---------------- #
    #**********************************************************#

So let’s recover our data and get rid of the snapshot.

    root@zfs-demo:/pool/data# cat /pool/data/.zfs/snapshot/2014-09-14/hosts.txt > /pool/data/hosts.txt
    root@zfs-demo:/pool/data# zfs destroy pool/data@2014-09-14
    root@zfs-demo:/pool/data# zfs list
    NAME        USED  AVAIL  REFER  MOUNTPOINT
    pool        507K  4.81G  67.1K  /pool
    pool/data   272K  4.81G   272K  /pool/data
    root@zfs-demo:/pool/data# head /pool/data/hosts.txt
    # This MVPS HOSTS file is a free download from: #
    # http://winhelp2002.mvps.org/hosts.htm #
    # #
    # Notes: The Operating System does not read the "#" symbol #
    # You can create your own notes, after the # symbol #
    # This *must* be the first line: 127.0.0.1 localhost #
    # #
    #**********************************************************#
    # --------------- Updated: August-20-2014 ---------------- #
    #**********************************************************#

All done!
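The recovery step can be wrapped in a small function so the snapshot path does not have to be typed by hand. This is only a sketch: `restore_from_snapshot` is a hypothetical name, and it assumes the snapshot is reachable through the dataset’s .zfs/snapshot directory as in the session above.

```shell
# restore_from_snapshot is a hypothetical helper, not a ZFS command.
# Arguments: dataset mountpoint, snapshot name, file path relative to the mountpoint.
restore_from_snapshot() {
    mountpoint=$1
    snapshot=$2
    file=$3
    source_file="${mountpoint}/.zfs/snapshot/${snapshot}/${file}"
    if [ ! -f "$source_file" ]; then
        echo "not found in snapshot: $source_file" >&2
        return 1
    fi
    # -p keeps the mode and timestamps of the snapshot copy.
    cp -p "$source_file" "${mountpoint}/${file}"
}

# Usage:
#   restore_from_snapshot /pool/data 2014-09-14 hosts.txt
```

It is safer to verify the restored file before destroying the snapshot it came from.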

This is a big deal

ZFS snapshots have the following important benefits:

- They only take up the space you need them to

- Their creation takes seconds

- Recovering from a snapshot happens as fast as you can read/write the data without any setup

- Their creation can be automated

While the above example is a simple dataset, the process would be identical and equally efficient if we were working with terabytes of user files or virtual machine disk files.

Offsite backup using zfs send with snapshots

Most of the time I feel safe calling my local snapshots “backups”, but in the event of total system failure it wouldn’t be safe to make that assumption. The entirety of a ZFS snapshot can be exported to a file or streamed over the network via the zfs send command.

Sending snapshot data

    root@zfs-demo:/pool/data# zfs list -t all
    NAME        USED  AVAIL  REFER  MOUNTPOINT
    pool        507K  4.81G  67.1K  /pool
    pool/data   272K  4.81G   272K  /pool/data
    root@zfs-demo:/pool/data# zfs snapshot pool/data@send1
    root@zfs-demo:/pool/data# zfs list -t all
    NAME              USED  AVAIL  REFER  MOUNTPOINT
    pool              511K  4.81G  67.1K  /pool
    pool/data         272K  4.81G   272K  /pool/data
    pool/data@send1      0      -   272K  -
    root@zfs-demo:/pool/data# zfs send pool/data@send1 > /tmp/send1
    root@zfs-demo:/pool/data# ls -al /tmp
    total 564
    drwxrwxrwt  2 root root   4096 Sep 15 00:38 .
    drwxr-xr-x 23 root root   4096 Sep 14 23:20 ..
    -rw-r--r--  1 root root 567216 Sep 15 00:38 send1

The above example is the simplest form of sending a snapshot. I have exported everything that is contained in pool/data@send1 to a single file in my /tmp folder. Since /tmp in this install is an ext4 file system, the data takes up 553K uncompressed instead of the 272K it took up while inside of ZFS.

Receiving a ZFS snapshot into a new file system

Now that I have my snapshot exported, any compatible ZFS system is capable of importing it. Below is an example of doing this locally on the same machine.

    root@zfs-demo:/pool/data# zfs list -t all
    NAME              USED  AVAIL  REFER  MOUNTPOINT
    pool              514K  4.81G  67.1K  /pool
    pool/data         272K  4.81G   272K  /pool/data
    pool/data@send1      0      -   272K  -
    root@zfs-demo:/pool/data# zfs recv pool/data2 < /tmp/send1
    root@zfs-demo:/pool/data# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool               805K  4.81G  67.1K  /pool
    pool/data          272K  4.81G   272K  /pool/data
    pool/data@send1       0      -   272K  -
    pool/data2         272K  4.81G   272K  /pool/data2
    pool/data2@send1      0      -   272K  -
    root@zfs-demo:/pool/data# ls -al /pool/data2
    total 214
    drwxr-xr-x 2 root root      3 Sep 14 23:30 .
    drwxr-xr-x 4 root root      4 Sep 15 00:52 ..
    -rw-r--r-- 1 root root 511276 Sep 15 00:09 hosts.txt

I have now manually imported everything in my @send1 snapshot into a new dataset called “data2”. This is significant because it can be done while the sending file system is online, with very little performance impact, and it can be done over a network stream.

More advanced send and receive operations

While the above example is a great proof of concept there are a few other valuable techniques to be considered when sending a snapshot.

Preparing a dataset to be a reliable backup location

Now that I have my base snapshot received in pool/data2 we can assume changes are going to take place. The dataset pool/data2 can be kept in line with pool/data by sending incremental snapshots of only what has changed since the last send. This requires three things:

- The source file system needs to still have the first snapshot as a reference to generate what’s changed

- The destination file system needs to be unmodified since the snapshot used as the starting point

- The ZFS send operation needs to include two snapshots, a from and a to, using the “-i” parameter
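The three requirements above can be sketched as a small wrapper. The function name `send_incremental` and its argument order are my own invention for illustration; the `zfs` subcommands it calls (`list`, `snapshot`, `send -i`, `recv`) are the same ones used throughout this article.

```shell
# send_incremental is a hypothetical wrapper, not a ZFS command.
# Arguments: source dataset, previous snapshot name, new snapshot name, destination dataset.
send_incremental() {
    src=$1
    from=$2
    to=$3
    dest=$4
    # The source must still hold the "from" snapshot, otherwise there is
    # nothing to compute the changes against.
    if ! zfs list -t snapshot "${src}@${from}" >/dev/null 2>&1; then
        echo "missing base snapshot ${src}@${from}" >&2
        return 1
    fi
    # Take the new snapshot, then send only the difference between the two.
    zfs snapshot "${src}@${to}" || return 1
    zfs send -i "${src}@${from}" "${src}@${to}" | zfs recv "$dest"
}

# Usage:
#   send_incremental pool/data send1 send2 pool/data2
```

The wrapper cannot check the second requirement from the sending side; if the destination has been modified, the receive fails and reports it.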

Now that our destination dataset has been established and we intend to use it as a backup location, let’s make sure it cannot be modified.

    root@zfs-demo:/# zfs get readonly
    NAME              PROPERTY  VALUE  SOURCE
    pool              readonly  off    default
    pool/data         readonly  off    default
    pool/data@send1   readonly  -      -
    pool/data2        readonly  off    local
    pool/data2@send1  readonly  -      -
    root@zfs-demo:/# zfs unmount pool/data2
    root@zfs-demo:/# zfs set readonly=on pool/data2
    root@zfs-demo:/# zfs mount -a
    root@zfs-demo:/# zfs get readonly
    NAME              PROPERTY  VALUE  SOURCE
    pool              readonly  off    default
    pool/data         readonly  off    default
    pool/data@send1   readonly  -      -
    pool/data2        readonly  on     local
    pool/data2@send1  readonly  -      -

Note that here I took the precaution of unmounting and remounting pool/data2; otherwise the Linux mount would remain read-write until the next mount.

Sending a ZFS incremental snapshot

In order to send an incremental snapshot we need some changes on the source system that warrant a zfs send.

    root@zfs-demo:/# cat /var/log/kern.log >> /pool/data/hosts.txt
    root@zfs-demo:/# ls -al /pool/data
    total 369
    drwxr-xr-x 2 root root       3 Sep 14 23:30 .
    drwxr-xr-x 4 root root       4 Sep 15 00:52 ..
    -rw-r--r-- 1 root root 1125808 Sep 15 16:49 hosts.txt

Now rather than overwriting our hosts.txt we have appended the kern.log to the end of it. Since we want to keep data2 in sync with this let’s generate and send a snapshot of the changes.

    root@zfs-demo:/# zfs list -t snapshot
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool/data@send1   90.7K      -   272K  -
    pool/data2@send1  42.2K      -   272K  -
    root@zfs-demo:/# zfs snapshot pool/data@send2
    root@zfs-demo:/# zfs send -i pool/data@send1 pool/data@send2 | zfs recv pool/data2
    cannot receive incremental stream: destination pool/data2 has been modified since most recent snapshot
    warning: cannot send 'pool/data@send2': Broken pipe

It turns out that our destination file system is in fact different from our first snapshot! This is because ZFS keeps track of all access with a timestamp, so our ls actually changed pool/data2. You can avoid this in file systems, and should for pools that back replication, by setting the ZFS property “atime=off”. However, that doesn’t help us right now, but this does:

    root@zfs-demo:/# zfs rollback pool/data2@send1

ZFS rollback is a procedure that puts a file system back to exactly how it was at the time a snapshot was taken. This feature is powerful but also dangerous, so if you ever use it to reverse something like our corruption example earlier in this article you need to make certain you are OK with losing what has been written since.

Now that we have set our data2 dataset back to the same state as our starting snapshot we can perform our incremental send:

    root@zfs-demo:/# zfs send -i pool/data@send1 pool/data@send2 | zfs recv pool/data2
    root@zfs-demo:/# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool              1.33M  4.81G  69.6K  /pool
    pool/data          518K  4.81G   427K  /pool/data
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data2         518K  4.81G   427K  /pool/data2
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2      0      -   427K  -

As you can see our send worked properly this time. Now that we have “readonly=on” set for pool/data2, local access should no longer modify it, so this should not happen again.

ZFS snapshot send over SSH

Everything we have done so far has been local. However, the ideal use of a ZFS send is to transport data to another location. If you want to send data securely your best bet is to send it via SSH. Let’s take another snapshot and send it via SSH.

    root@zfs-demo:/# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool              1.33M  4.81G  69.6K  /pool
    pool/data          518K  4.81G   427K  /pool/data
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data2         518K  4.81G   427K  /pool/data2
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2      0      -   427K  -
    root@zfs-demo:/# zfs snapshot pool/data@send3
    root@zfs-demo:/# zfs send -i pool/data@send2 pool/data@send3 | ssh root@localhost zfs recv pool/data2
    root@localhost's password:
    root@zfs-demo:/# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool              1.37M  4.81G  69.6K  /pool
    pool/data          518K  4.81G   427K  /pool/data
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data2         520K  4.81G   427K  /pool/data2
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3      0      -   427K  -

In the example above I have sent the delta between @send2 and @send3 over SSH. For automated sends over SSH it makes sense to use a key instead to avoid the password prompt. While this example was to localhost it works the same with any destination.
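For unattended runs, the key-based variant might look like the sketch below. The helper name `send_over_ssh`, the host `root@backup` and the key path are all hypothetical. The `-i` and `-o BatchMode=yes` options are standard OpenSSH options; the latter makes ssh fail instead of stopping to ask for a password, which is what you want from cron.

```shell
# send_over_ssh is a hypothetical helper, not a ZFS command.
# Arguments: previous snapshot, new snapshot, remote login, destination dataset, private key file.
send_over_ssh() {
    from_snap=$1
    to_snap=$2
    remote=$3
    dest=$4
    key=$5
    # Stream the delta between the two snapshots into zfs recv on the remote side.
    zfs send -i "$from_snap" "$to_snap" |
        ssh -i "$key" -o BatchMode=yes "$remote" zfs recv "$dest"
}

# Usage (host and key path are placeholders):
#   send_over_ssh pool/data@send2 pool/data@send3 root@backup pool/data2 /root/.ssh/id_backup
```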

ZFS snapshot send over netcat

If you’re sending to a location where you don’t need encryption between the two points the overhead of SSH is something to consider. Personally I have found that netcat sends data at least twice as fast as SSH. When sending a base snapshot of a large data set I will typically use netcat.

Using netcat is a little more complicated because you need to perform two steps:

- Set up a netcat listener on your receiving system that pipes into a waiting zfs receive

- Initiate your send via zfs send through netcat

On the receiver

    root@zfs-demo:~# nc -w 300 -l -p 2020 | zfs recv pool/data2

The above command tells netcat to listen on port 2020 with a 300 second timeout and to pipe whatever it receives into zfs recv for pool/data2.

On the sender

    root@zfs-demo:/# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool              1.38M  4.81G  69.6K  /pool
    pool/data          518K  4.81G   427K  /pool/data
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data2         520K  4.81G   427K  /pool/data2
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3      0      -   427K  -
    root@zfs-demo:/# zfs snapshot pool/data@send4
    root@zfs-demo:/# zfs send -i pool/data@send3 pool/data@send4 | nc -w 20 127.0.0.1 2020

We create our new snapshot and send the delta into our netcat. Once again, if you’re using netcat you should expect:

- Around double the speed of your SSH send

- Your data will be unencrypted so technically someone could sniff it and potentially put something together

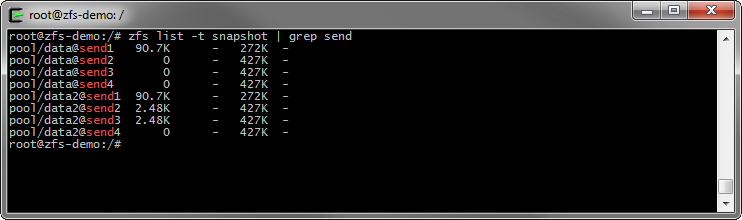

Sending entire datasets

The most likely situation is that you have a production server that has been around for a while with tons of snapshots and now you want to send that data to a backup server. Luckily ZFS makes this very simple! Let’s destroy our pool/data2 to show by example how easy it is.

    root@zfs-demo:/# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool              1.45M  4.81G  69.6K  /pool
    pool/data          518K  4.81G   427K  /pool/data
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data@send4       0      -   427K  -
    pool/data2         523K  4.81G   427K  /pool/data2
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3  2.48K      -   427K  -
    pool/data2@send4      0      -   427K  -
    root@zfs-demo:/# zfs destroy pool/data2
    cannot destroy 'pool/data2': filesystem has children
    use '-r' to destroy the following datasets:
    pool/data2@send4
    pool/data2@send3
    pool/data2@send1
    pool/data2@send2
    root@zfs-demo:/# zfs destroy -r pool/data2
    root@zfs-demo:/# zfs list -t all
    NAME              USED  AVAIL  REFER  MOUNTPOINT
    pool              831K  4.81G  67.1K  /pool
    pool/data         518K  4.81G   427K  /pool/data
    pool/data@send1  90.7K      -   272K  -
    pool/data@send2      0      -   427K  -
    pool/data@send3      0      -   427K  -
    pool/data@send4      0      -   427K  -

So now we don’t have pool/data2 anymore. As you can see above, ZFS tries to protect you from accidentally destroying datasets that have resident snapshots (and potentially clones, which we will discuss later). The -r option allows you to recursively destroy everything.

To get pool/data and all of its snapshots onto a remote destination we can simply run the command below.

    root@zfs-demo:/# zfs send -v -R pool/data@send4 | ssh root@localhost zfs recv pool/data2
    send from @ to pool/data@send1 estimated size is 529K
    send from @send1 to pool/data@send2 estimated size is 779K
    send from @send2 to pool/data@send3 estimated size is 0
    send from @send3 to pool/data@send4 estimated size is 0
    total estimated size is 1.28M
    TIME      SENT   SNAPSHOT
    17:47:23  40.7K  pool/data@send1
    17:47:24  40.7K  pool/data@send1
    root@zfs-demo:/# zfs list -t all
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool              1.44M  4.81G  69.6K  /pool
    pool/data          518K  4.81G   427K  /pool/data
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data@send4       0      -   427K  -
    pool/data2         523K  4.81G   427K  /pool/data2
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3  2.48K      -   427K  -
    pool/data2@send4      0      -   427K  -

By using zfs send -R we can send a snapshot and every snapshot leading up to it. You may also have noticed I added -v, which is very useful for long sends because you can view the progress. While we could have simply sent pool/data@send4 without -R, we would then be missing send1, send2 and send3 on our destination dataset, so it all depends on what you want.

Why this is better than rsync

While rsync is a great tool that I still use constantly, ZFS send is better suited for large scale backups because:

- There is almost no CPU overhead because there is no need to run compares

- ZFS send happens at the block level and is independent of file systems

- ZFS send is based on snapshots, which means it is a reliable atomic set of data

- ZFS send can be run on active data without concern over locks or conflicting changes

Destroying snapshots

On active datasets snapshots will grow as they become more and more different from the current dataset. Eventually you’ll want to get rid of a snapshot. Removing snapshots is very simple using the “zfs destroy” command.

    root@zfs-demo:/# zfs list -t snapshot
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data@send4       0      -   427K  -
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3  2.48K      -   427K  -
    pool/data2@send4      0      -   427K  -
    root@zfs-demo:/# zfs snapshot pool/data@notforlong
    root@zfs-demo:/# zfs list -t snapshot
    NAME                   USED  AVAIL  REFER  MOUNTPOINT
    pool/data@send1       90.7K      -   272K  -
    pool/data@send2           0      -   427K  -
    pool/data@send3           0      -   427K  -
    pool/data@send4           0      -   427K  -
    pool/data@notforlong      0      -   427K  -
    pool/data2@send1      90.7K      -   272K  -
    pool/data2@send2      2.48K      -   427K  -
    pool/data2@send3      2.48K      -   427K  -
    pool/data2@send4          0      -   427K  -
    root@zfs-demo:/# zfs destroy pool/data@notforlong
    root@zfs-demo:/# zfs list -t snapshot
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data@send4       0      -   427K  -
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3  2.48K      -   427K  -
    pool/data2@send4      0      -   427K  -

Be careful when using the zfs destroy command as it is the same for datasets, snapshots and clones.
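One way to reduce that risk in scripts is a guard that refuses anything that is not a snapshot name. The sketch below is an illustration only: `destroy_snapshot` is a hypothetical wrapper, and the check is simply whether the argument contains an “@”.

```shell
# destroy_snapshot is a hypothetical wrapper, not a ZFS command.
# It only passes the target to "zfs destroy" if it looks like dataset@snapshot.
destroy_snapshot() {
    target=$1
    case $target in
        *@*) ;;
        *)
            echo "refusing: '$target' is not a snapshot name" >&2
            return 1
            ;;
    esac
    zfs destroy "$target"
}

# Usage:
#   destroy_snapshot pool/data@notforlong   # destroys the snapshot
#   destroy_snapshot pool/data              # refused, returns 1
```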

ZFS clones

So far every time we’ve worked with a ZFS snapshot it has been a backup source for versions of data which are read-only. However, ZFS clones change that by giving you the power to make read/write replicas of datasets based on snapshots.

Example use case

Let’s say you have a dataset that holds all your production virtual machine disk files and now it’s time to test a roll-out of a new product version. Normally you’d have to create a duplicate copy of all the machines you want to test on. This would take time to copy and extra disk space where those copies reside. However, with a ZFS clone you can almost instantly have a duplicate read/write version of all of your machines that initially takes up almost no extra space.

    root@zfs-demo:/# zfs list -t snapshot
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    pool/data@send1   90.7K      -   272K  -
    pool/data@send2       0      -   427K  -
    pool/data@send3       0      -   427K  -
    pool/data@send4       0      -   427K  -
    pool/data2@send1  90.7K      -   272K  -
    pool/data2@send2  2.48K      -   427K  -
    pool/data2@send3  2.48K      -   427K  -
    pool/data2@send4      0      -   427K  -
    root@zfs-demo:/# zfs clone pool/data@send4 pool/myclone
    root@zfs-demo:/# zfs list
    NAME           USED  AVAIL  REFER  MOUNTPOINT
    pool          1.69M  4.81G  72.0K  /pool
    pool/data      518K  4.81G   427K  /pool/data
    pool/data2     523K  4.81G   427K  /pool/data2
    pool/myclone  2.48K  4.81G   427K  /pool/myclone
    root@zfs-demo:/# ls -al /pool/myclone
    total 369
    drwxr-xr-x 2 root root       3 Sep 14 23:30 .
    drwxr-xr-x 5 root root       5 Sep 15 18:42 ..
    -rw-r--r-- 1 root root 1125808 Sep 15 16:49 hosts.txt

Now I have a dataset called pool/myclone (mounted at /pool/myclone) that I can do whatever I want to without consequences to any other file system. Clones rely on the snapshots they are created from, so before you make a clone be certain the snapshot it is based on is one that will stick around until you’re done with the clone.
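Because of that dependency, it can be handy to check which clones still hang off a snapshot before trying to remove it. The following is a rough sketch: `clones_of` is a hypothetical helper that reads the `origin` property, which ZFS sets on every clone to the snapshot it was created from.

```shell
# clones_of is a hypothetical helper, not a ZFS command.
# It prints every filesystem or volume whose origin is the given snapshot.
clones_of() {
    snapshot=$1
    # -H drops the header line and separates the columns with tabs.
    zfs list -H -o name,origin -t filesystem,volume |
        awk -F '\t' -v snap="$snapshot" '$2 == snap { print $1 }'
}

# Usage:
#   clones_of pool/data@send4
```

With the clone from the session above still in place, this would be expected to list pool/myclone.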

Removing a clone is as simple as removing a snapshot.

    root@zfs-demo:/# zfs list
    NAME           USED  AVAIL  REFER  MOUNTPOINT
    pool          1.69M  4.81G  72.0K  /pool
    pool/data      518K  4.81G   427K  /pool/data
    pool/data2     523K  4.81G   427K  /pool/data2
    pool/myclone  2.48K  4.81G   427K  /pool/myclone
    root@zfs-demo:/# zfs destroy pool/myclone
    root@zfs-demo:/# zfs list
    NAME         USED  AVAIL  REFER  MOUNTPOINT
    pool        1.46M  4.81G  72.0K  /pool
    pool/data    518K  4.81G   427K  /pool/data
    pool/data2   523K  4.81G   427K  /pool/data2

Automating snapshots

There are three easy ways you can automate your ZFS snapshots:

- Use the ZoL zfs-auto-snapshot tool

- If you elect to use Napp-it, set up auto-snaps using the GUI

- Use a bash script

Personally I have elected to use a bash script as I prefer CLI over napp-it and started using my script before auto-snaps had all the options I wanted. If you wish to use my script here it is:

    #!/bin/bash
    #---- Example crontab
    # SHELL=/bin/bash
    #-- Custom snapshot call works like this
    # snapshot.sh (pool you want) (filesystem you want) (unique name) (unique id, in case we have same name twice) (how many to keep)
    ## Snapshots for pool/data (example setup for pool/data)
    #0,15,30,45 * * * * /var/scripts/zfs/snapshot.sh pool data minutes 100 5
    #0 * * * * /var/scripts/zfs/snapshot.sh pool data hourly 101 24
    #45 23 * * * /var/scripts/zfs/snapshot.sh pool data daily 102 31
    #45 23 * * 5 /var/scripts/zfs/snapshot.sh pool data weekly 103 7
    #45 23 1 * * /var/scripts/zfs/snapshot.sh pool data monthly 104 12
    #45 23 31 12 * /var/scripts/zfs/snapshot.sh pool data yearly 105 2
    ## --- The above setup would keep five 15 minute intervals, roll that into 24 hourly intervals, roll that into 31 daily, then 7 weekly, then 12 monthly, then 2 yearly

    #-- User settings for snapshots called from command line
    pool=$1
    filesystem=$2
    name=$3
    jobid=$4
    keep=$5

    ## Get timestamped name for new snapshot
    snap="$pool/$filesystem@$name-${jobid}_$(date +%Y.%m.%d.%H.%M.%S)"

    ## Create snapshot (which includes user settings)
    zfs snapshot "$snap"

    ## Get full list of snapshots that fall under user specified group
    snaps=( $(zfs list -o name -t snapshot | grep "$pool/$filesystem@$name-$jobid") )

    ## Count how many snaps we have total under their grep
    elements=${#snaps[@]}

    ## Mark where to stop, based on how many they want to keep
    stop=$((elements-keep))

    ## Delete every snap that they don't want to keep
    for (( i=0; i<stop; i++ ))
    do
        zfs destroy "${snaps[$i]}"
    done

As suggested in the comments I place it in my crontab. My production use is identical to the commented version.
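The retention rule at the heart of the script, keep the newest N and delete the rest, can be isolated and tried without touching a pool. This is a sketch under my own naming (`snapshots_to_prune` is hypothetical); it expects snapshot names on standard input ordered oldest first, one per line.

```shell
# snapshots_to_prune is a hypothetical helper illustrating the retention rule.
# Input:  snapshot names on stdin, oldest first, one per line.
# Output: the names that fall outside the newest "keep" entries.
snapshots_to_prune() {
    keep=$1
    awk -v keep="$keep" '
        { names[NR] = $0 }
        END { for (i = 1; i <= NR - keep; i++) print names[i] }
    '
}

# Example with made-up names: keep the newest two of four.
#   printf 'a\nb\nc\nd\n' | snapshots_to_prune 2
# prints "a" and "b".
```

In real use the input could come from something like `zfs list -H -o name -t snapshot -s creation` filtered with grep, where `-s creation` makes the oldest-first ordering explicit instead of relying on the default sort.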

Conclusion

In my production systems ZFS snapshots and clones play a major role in data integrity and testing. If I tried to calculate the number of hours these two functions have saved me I’d probably come up short. There are dozens of options surrounding snapshot, clone and send that I did not dive into in this article. As always, “man zfs” is your friend.

Thanks

- Mark Slatem for his easy to follow post on ZFS send over netcat http://blog.smartcore.net.au/fast-zfs-send-with-netcat/

- The ZFS on Linux community for providing a fantastic storage system to Linux http://zfsonlinux.org/

Mark,

Nice write up but please fix your two script samples (HTML less than syntax)

fs recv pool/data2 < /tmp/send1

and

fs recv pool/data2 < /tmp/send1

Cheers

Jon

Thanks for noticing that Jon! I have corrected the syntax issue, one of these days I need to edit the WordPress function so touching the visual editor on a post doesn’t do that.

Mark,

I noticed there is an undocumented (in older distros) but functional argument ‘split’ to ‘zpool’, e.g. ‘zpool split’, that can be used to clone/split/rename ZFS mirrors. I talked about this recently in a post to http://wiki.openindiana.org/oi/How+to+migrate+the+root+pool, yet I haven’t tested the entire procedure, nor have I tested my proposal below.

I’m just thinking in the event of LARGE mirrored data sets perhaps an initial ‘clone’ via the following might be prudent.

    zpool attach _pool_name_ _existing_mirror_member_ _new_mirror_member_
    zpool status _pool_name_
    # wait until resilver is done
    zpool split _pool_name_ _pool_backup_name_ _new_mirror_member_

Would be ideal for a ‘BULK’ backup on a 4TB, 6TB, or 8TB mirror where you could then move the disk to the remote system and rename the pool (on the remote system; note, rpools require more work if you want them bootable) via:

    zpool import -f _pool_backup_name_ _pool_name_

And then start using your method for automating snapshots

BR

Jon

Thanks so much. Helped me optimize a crucial project. You are a true experimenter and tester.

This was extremely helpful for me getting to understand zfs replication and how to maintain them. Thank you!

Very helpful instructions. Thank you very much.

But tinkering with “ZFS snapshot send over netcat” is not for me.

I love to use hpnssh https://sourceforge.net/projects/hpnssh/ in my private WAN (OpenVPN over a line of up to 10 Mbit).

It’s a replacement for ssh with much better throughput. If it is installed on both sites you can disable encryption too, if you really want to. You only need some optional additional switches, like this.

ssh -C -oNoneSwitch=yes -oNoneEnabled=yes userid@…

No need for netcat anymore :)

This script might be helpful: https://github.com/psy0rz/zfs_autobackup/blob/master/README.md

Overview of Snapshots

However, the longer a snapshot lives and becomes less ~~out of~~ **in** sync with its’ parent the more space it may take up as it could hold data that no longer exists elsewhere.